Whether it’s for research purposes, marketing campaigns, or simply to obtain contact information, data scraping has become an essential tool for businesses and individuals alike. But manually extracting data from Google Maps can be a time-consuming and tedious task. This is where web scraping comes in. Web scraping, also known as web harvesting or data scraping, is the automated process of extracting data from websites. In this blog post, we’ll be focusing specifically on how to scrape Google Maps for valuable information. Let’s dive in!

The Importance of Google Maps Data

Google Maps data holds significant value for a wide range of applications, including business analysis, research, and marketing strategies. Its comprehensive and up-to-date information on locations, businesses, and user-generated content enables businesses and researchers to gain valuable insights and make informed decisions.

Accurate and current data is crucial for successful analysis and effective strategy implementation. However, it is essential to understand the scraping process while ensuring compliance with legal and ethical guidelines to maintain integrity and respect the terms of service set by Google.

Understanding Google Maps and Its Data

Google Maps is a widely recognized and extensively used mapping service that offers an array of features and functionalities to its users. With its intuitive interface and comprehensive database, Google Maps has become an indispensable tool for individuals, businesses, and organizations around the world.

At its core, Google Maps provides users with accurate and detailed mapping information, allowing them to navigate through cities, towns, and rural areas with ease.

Users can search for specific addresses, landmarks, or points of interest, and the service provides step-by-step directions for various modes of transportation, including driving, walking, and public transit.

Google Maps offers satellite imagery and Street View, enabling users to explore locations virtually and obtain a realistic visual representation of their surroundings. Street View is particularly beneficial for businesses, as it allows potential customers to take virtual tours of establishments and make informed decisions.

Taken together, Google Maps combines accurate geographical data, interactive features, and user contributions in a single user-friendly service. Its versatility and extensive functionality make it an invaluable resource for individuals, businesses, and researchers seeking location-based information and insights.

Types of Data Available on Google Maps

The following are the three main types of data available on Google Maps:

Point of Interest (POI) Data

Google Maps contains a wealth of information about various points of interest, including businesses, landmarks, and addresses. Users can search for specific types of businesses or establishments, such as restaurants, hotels, hospitals, and more.

Each POI listing typically includes details such as contact information, opening hours, website links, and customer reviews.

This data is invaluable for businesses conducting market research, competitive analysis, or location-based targeting for advertising and marketing campaigns.

Ratings, Reviews, and User-generated Content

Google Maps encourages users to share their experiences by leaving ratings, reviews, and other user-generated content. These reviews provide insights into the quality, service, and overall customer satisfaction of businesses.

Users can rate establishments and leave detailed feedback, helping others make informed decisions. For businesses, monitoring and analyzing these reviews can provide valuable feedback for improvement and reputation management.

Geospatial Data

Google Maps incorporates geospatial data, including coordinates and boundaries, to accurately represent locations and geographic areas. Coordinates allow precise identification of specific points on the map, making it useful for navigation, geolocation-based services, and geospatial analysis.

Boundaries, such as city limits, neighborhood boundaries, or geographic regions, enable users to visualize and analyze data within specific areas. Geospatial data plays a vital role in applications such as urban planning, logistics, and location-based services.
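To make the two ideas above concrete, here is a minimal sketch of how coordinates and a simple boundary might be used once the data has been collected. The `Place` class, the function names, and the rectangular bounding box are illustrative assumptions, not anything Google Maps provides; real boundaries such as city limits are usually polygons and need a proper geospatial library.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, a common approximation


@dataclass(frozen=True)
class Place:
    """Hypothetical record for one location (not a Google Maps type)."""
    name: str
    lat: float
    lng: float


def haversine_km(lat1, lng1, lat2, lng2):
    """Great-circle distance between two coordinates, in kilometres."""
    dlat = radians(lat2 - lat1)
    dlng = radians(lng2 - lng1)
    a = (sin(dlat / 2) ** 2
         + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlng / 2) ** 2)
    # Clamp to 1.0 to guard against tiny floating-point overshoots.
    return 2 * EARTH_RADIUS_KM * asin(min(1.0, sqrt(a)))


def within_bounds(place, south, west, north, east):
    """True if the place lies inside a simple rectangular boundary.

    Assumes the box does not cross the antimeridian (180 degrees longitude).
    """
    return south <= place.lat <= north and west <= place.lng <= east
```

A distance check like `haversine_km` is handy for "everything within 5 km" style filters, while `within_bounds` is a rough stand-in for analyzing data inside a specific area.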

Methods of Scraping Google Maps

There are three ways to collect data from Google Maps:

Manual Data Collection

Manual data collection involves gathering data from Google Maps manually, typically by searching for specific information and recording it by hand. This approach requires users to visit the Google Maps website or mobile app, search for desired locations, and manually extract relevant data such as business names, addresses, contact details, and reviews.

Manual collection can be suitable for small-scale projects or when a limited amount of data needs to be gathered. However, it has significant limitations in terms of scalability and efficiency.

Manually collecting large amounts of data can be time-consuming, prone to errors, and not feasible for projects requiring frequent updates.

Web Scraping Techniques

Web scraping is an automated method of data extraction from websites, including Google Maps. It involves using programming techniques to parse and extract relevant information from the HTML structure of web pages.

By traversing the DOM, a scraper can extract specific elements such as business names, addresses, ratings, reviews, and other data points from Google Maps listings. Various tools and libraries facilitate these tasks, such as Beautiful Soup for HTML parsing, Scrapy for crawling, and Selenium for browser automation. Because Google Maps renders most of its content with JavaScript, a browser automation tool is usually needed to load the page before it can be parsed.

Web scraping offers a more efficient and scalable approach compared to manual collection, allowing for the extraction of large volumes of data in a relatively short time.
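As a rough illustration of what "parsing the HTML structure" means, the sketch below pulls names, addresses, and ratings out of a small HTML snippet. It uses Python's built-in `html.parser` instead of Beautiful Soup so that it runs without extra installs. The sample markup and the class names (`listing`, `name`, `address`, `rating`) are made up for this example; real Google Maps markup is different and changes often.

```python
from html.parser import HTMLParser

# Made-up markup for illustration only; not real Google Maps HTML.
SAMPLE_HTML = """
<div class="listing">
  <span class="name">Cafe Uno</span>
  <span class="address">1 Main St</span>
  <span class="rating">4.5</span>
</div>
<div class="listing">
  <span class="name">Bistro Due</span>
  <span class="address">2 Oak Ave</span>
  <span class="rating">4.1</span>
</div>
"""


class ListingParser(HTMLParser):
    """Collects one dict per <div class="listing"> block."""

    FIELDS = {"name", "address", "rating"}

    def __init__(self):
        super().__init__()
        self.listings = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "listing" in classes:
            self.listings.append({})  # start a new listing
        elif tag == "span" and self.listings:
            self._field = next((c for c in classes if c in self.FIELDS), None)

    def handle_data(self, data):
        if self._field and data.strip():
            self.listings[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_listings(html):
    """Return a list of listing dicts found in the given HTML string."""
    parser = ListingParser()
    parser.feed(html)
    return parser.listings
```

Calling `parse_listings(SAMPLE_HTML)` returns two dictionaries, one per listing, with the rating still stored as text. With Beautiful Soup the same idea would be expressed with CSS selectors rather than a hand-written parser class.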

API-Based Data Extraction

Google also provides Application Programming Interfaces (APIs), offered through the Google Maps Platform, that allow developers to access and extract data programmatically. These APIs offer various capabilities, including geocoding, directions, and place searches.

To use the API, developers need to obtain an API key and set up the necessary credentials. API-based data extraction provides a structured and reliable method for retrieving data from Google Maps.

It offers benefits such as real-time data updates, improved data accuracy, and efficient handling of large datasets.

It’s important to note that API usage may have limitations, including usage restrictions, rate limits, and potential costs based on the API plan chosen.
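Here is a minimal sketch of what an API-based place search might look like, assuming the legacy Places API Text Search endpoint and its JSON response shape. It only builds the request URL and parses a response; the actual network call is left out. `YOUR_API_KEY` is a placeholder, the helper names are hypothetical, and the sample response is hand-written for illustration rather than real output. Check the current Google Maps Platform documentation before relying on any of the field names.

```python
import json
from urllib.parse import urlencode

# Legacy Places API Text Search endpoint (assumed here; newer API versions differ).
TEXT_SEARCH_URL = "https://maps.googleapis.com/maps/api/place/textsearch/json"


def build_text_search_url(query, api_key):
    """Build the request URL for a text search such as 'coffee shops in Austin'."""
    return f"{TEXT_SEARCH_URL}?{urlencode({'query': query, 'key': api_key})}"


def extract_places(payload):
    """Pick a few commonly used fields out of a Text Search style response."""
    places = []
    for item in payload.get("results", []):
        location = item.get("geometry", {}).get("location", {})
        places.append({
            "name": item.get("name"),
            "address": item.get("formatted_address"),
            "rating": item.get("rating"),
            "lat": location.get("lat"),
            "lng": location.get("lng"),
        })
    return places


# Hand-written sample in the assumed response shape, not real API output.
SAMPLE_RESPONSE = json.loads("""
{
  "status": "OK",
  "results": [
    {
      "name": "Cafe Uno",
      "formatted_address": "1 Main St, Austin, TX",
      "rating": 4.5,
      "geometry": {"location": {"lat": 30.27, "lng": -97.74}}
    }
  ]
}
""")
```

Because the response is already structured JSON, there is no HTML to parse, which is the main reason the API route tends to be more reliable than scraping the page.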

Best Practices for Scraping Google Maps

Now let’s go ahead and explore the best practices for scraping Google Maps.

Respect the Terms of Service and API Usage Policies

Respecting Google’s Terms of Service and API usage policies is extremely important when scraping data from Google Maps. It is essential to review and comply with the guidelines provided by Google for data usage.

This includes understanding any restrictions on scraping activities, usage limits, and prohibited actions. Obtaining proper authorization and permissions, if required, is also important to ensure that the scraping process aligns with legal and ethical standards.

Use Rate Limiting and Proxies

Implementing rate limiting is essential to avoid overloading the Google Maps website and potentially causing disruptions. Rate limiting involves controlling the frequency and volume of requests made to the website, ensuring that scraping activities are performed at a reasonable pace.
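One simple way to do this is to enforce a minimum gap between consecutive requests. The sketch below is one possible approach, not the only one; the `RateLimiter` class and its parameters are hypothetical names, and the two-second interval in the usage note is an arbitrary example rather than a recommended value.

```python
import time


class RateLimiter:
    """Blocks so that calls to wait() are at least min_interval seconds apart."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock    # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None      # time of the previous request, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)  # pause until the interval has passed
                now = self._clock()
        self._last = now
```

In a scraper you would create one limiter, for example `limiter = RateLimiter(2.0)`, and call `limiter.wait()` immediately before each request.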

Using proxies can help mask the IP address of the scraper, preventing IP blocking and enabling anonymous scraping. Proxies act as intermediaries between the scraper and the target website, providing an extra layer of protection and ensuring uninterrupted data extraction.
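As a sketch of how a scraper might route requests through a rotating set of proxies, the example below uses only Python's standard `urllib`. The proxy addresses and the `proxy_openers` helper are placeholders invented for this example; a real setup would use proxies you are authorized to use, and no request is actually sent here.

```python
from itertools import cycle
import urllib.request

# Placeholder proxy addresses for illustration; replace with your own.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
]


def proxy_openers(proxy_urls):
    """Yield (proxy_url, opener) pairs, cycling through the proxies forever."""
    for url in cycle(proxy_urls):
        handler = urllib.request.ProxyHandler({"http": url, "https": url})
        yield url, urllib.request.build_opener(handler)
```

Each request would then take the next pair from the generator and call `opener.open(target_url)`, so consecutive requests leave through different proxies.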

Handling Captchas and Anti-Scraping Mechanisms

Google Maps implements various anti-scraping measures, including captchas, to prevent automated data extraction. Captchas are designed to distinguish between human users and bots, requiring users to solve visual or interactive challenges to proceed.

When faced with captchas, strategies such as using automated captcha-solving services or implementing captcha-solving algorithms can help bypass these barriers.

Being aware of and adapting to other anti-scraping mechanisms implemented by Google Maps, such as request pattern analysis and session tracking, can contribute to successful scraping.

Data Cleaning and Validation

Data cleaning and validation are essential steps in the scraping process to ensure the accuracy and reliability of the extracted data. Cleaning involves removing duplicates, handling inconsistent or incomplete data, and resolving formatting issues.

Validation techniques can be applied to verify the integrity of the scraped data, such as cross-referencing information with multiple sources or conducting data quality checks.
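The cleaning and validation steps above can be sketched in a few lines. This example assumes each scraped record is a dictionary with `name`, `address`, `phone`, `lat`, and `lng` keys, which is a made-up layout for illustration; the rules it applies (collapse whitespace, keep digits only in phone numbers, drop records with impossible coordinates, drop duplicates by name and address) are just one reasonable set of checks.

```python
import re


def normalize_phone(raw):
    """Keep digits only; return None when nothing usable is left."""
    digits = re.sub(r"\D", "", raw or "")
    return digits or None


def is_valid_coordinate(lat, lng):
    """Basic range check for latitude and longitude."""
    return (lat is not None and lng is not None
            and -90 <= lat <= 90 and -180 <= lng <= 180)


def clean_records(records):
    """Normalize fields, drop invalid rows, and remove duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        # Collapse repeated whitespace and trim the ends.
        name = " ".join((rec.get("name") or "").split())
        address = " ".join((rec.get("address") or "").split())
        if not name or not is_valid_coordinate(rec.get("lat"), rec.get("lng")):
            continue  # incomplete or implausible record
        key = (name.casefold(), address.casefold())
        if key in seen:
            continue  # duplicate of an earlier record
        seen.add(key)
        cleaned.append({**rec, "name": name, "address": address,
                        "phone": normalize_phone(rec.get("phone"))})
    return cleaned
```

Cross-referencing against a second source, as mentioned above, would be an additional step on top of these local checks.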

Top 5 Google Maps Crawlers

1. Leadinary

Leadinary is the ultimate lead generation engine designed specifically to target local businesses. With its extensive range of features, it has become the go-to solution for countless agencies and providers. One of its standout features is the interactive map with listings, powered by the official Google Maps API. Users can simply enter a keyword and select any location worldwide to view real-time results and access valuable data.

Leadinary takes lead generation a step further by crawling websites for additional information. Alongside the data from Google My Business (GMB) listings, it scours websites to gather crucial details. This includes contact information like emails and phone numbers, as well as social media URLs such as Facebook, Twitter, and LinkedIn.

Leadinary extracts website meta information like titles and keywords, providing deeper insights into each business. It even identifies technology-related information, such as the presence of ad pixels for platforms like Facebook Ads and Google Ads, as well as the content management system (CMS) used, be it WordPress or Shopify.

2. Phantom Buster

This software works best for tech-savvy individuals who understand how to set up scripts and automation. If you can figure out how to set up the automation, the platform can scrape Google Maps search results.

Note that Google Maps displays between 140 and 200 results for each search, so you won’t be able to access more than this. To get more, make your location search criteria more granular and run multiple searches within the same wider area.
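One way to make searches more granular is to split the wider area into a grid and run one search per cell. The sketch below only computes the cell centers; the `grid_centers` function is a hypothetical helper, and how each center is passed to a given tool depends on that tool and is not shown here.

```python
def grid_centers(south, west, north, east, rows, cols):
    """Split a bounding box into rows x cols cells and return each cell's center.

    Coordinates are (latitude, longitude) in degrees. Assumes the box does not
    cross the antimeridian.
    """
    lat_step = (north - south) / rows
    lng_step = (east - west) / cols
    return [
        (south + (r + 0.5) * lat_step, west + (c + 0.5) * lng_step)
        for r in range(rows)
        for c in range(cols)
    ]
```

For example, a 3 x 3 grid over a city turns one broad search into nine smaller ones. Cells may return overlapping results near their edges, so deduplicate the combined output afterwards.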

Furthermore, you’ll need to subscribe to one of Phantom Buster’s monthly plans to use its Google Maps Scraper. You also won’t get the additional benefits that Leadinary offers, such as saving leads into organized Lists, generating Google Business Profile audit reports and SEO reports, searching in bulk by location or keyword, and running local business outreach campaigns.

3. Octoparse

Octoparse is a handy tool that allows non-programmers to scrape data from websites. It offers a free plan and doesn’t require any coding skills. With Octoparse, you can create web crawlers using a simple drag-and-drop interface. This means you can easily build a step-by-step process to extract the specific information you need from a website.

One of the cool things about Octoparse is that it offers a bunch of pre-built templates specifically designed for scraping data from Google Maps. These templates are ready to use, saving you time and effort. You can choose a template that suits your needs and enter keywords or URLs to start scraping data right away.

4. Open-source Projects on GitHub

There are some projects on GitHub that can help you extract information from Google Maps. One example is a project written in Node.js. Many other people have already created useful projects that you can use, so there’s no need to start from scratch.

Even though you don’t have to write most of the code yourself, you still need to understand the basics and write some code to run the program. This can be challenging for people with little coding knowledge. The amount and quality of the data you collect depend on the open-source project on GitHub, which may not receive regular updates.

The output of the project is limited to a .txt file. If you need a large amount of data, this may not be the best method for obtaining it.

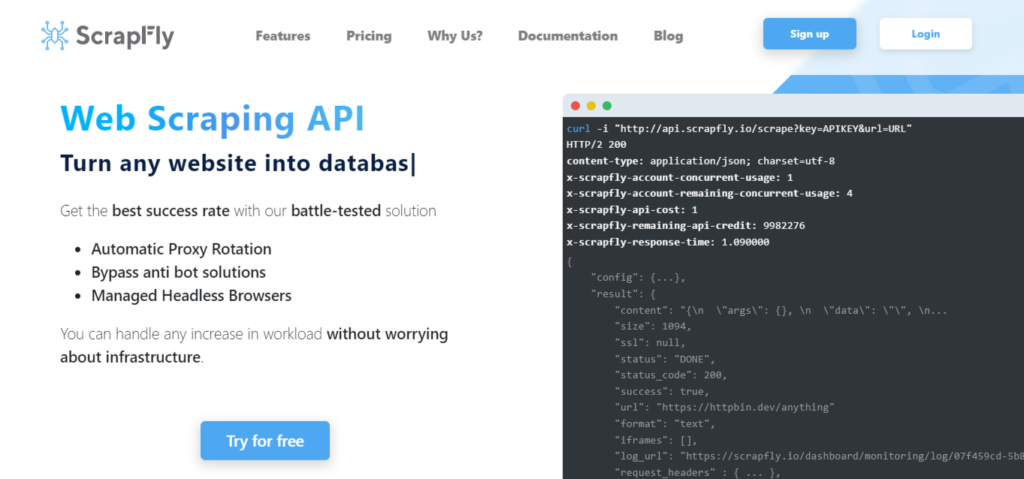

5. Scrapefly

Scrapefly is a framework that helps you download, clean, and store data from web pages. It comes with pre-written code that saves you time, but you still have to build the web crawler and handle everything else in code yourself. It is therefore best suited to programmers who are experienced in web scraping.


Wrapping Up

In a nutshell, web scraping Google Maps can be a real game-changer for businesses seeking to boost their Google My Business listing and dig up intel on their rivals, customers, or potential leads. But hey, remember to play it cool and stay on the right side of the law! Ethical scraping practices are the name of the game to dodge any legal headaches. Plus, don’t overlook the hurdles and snags that come hand in hand with scraping—getting blocked or ending up with wonky data can be a real bummer.